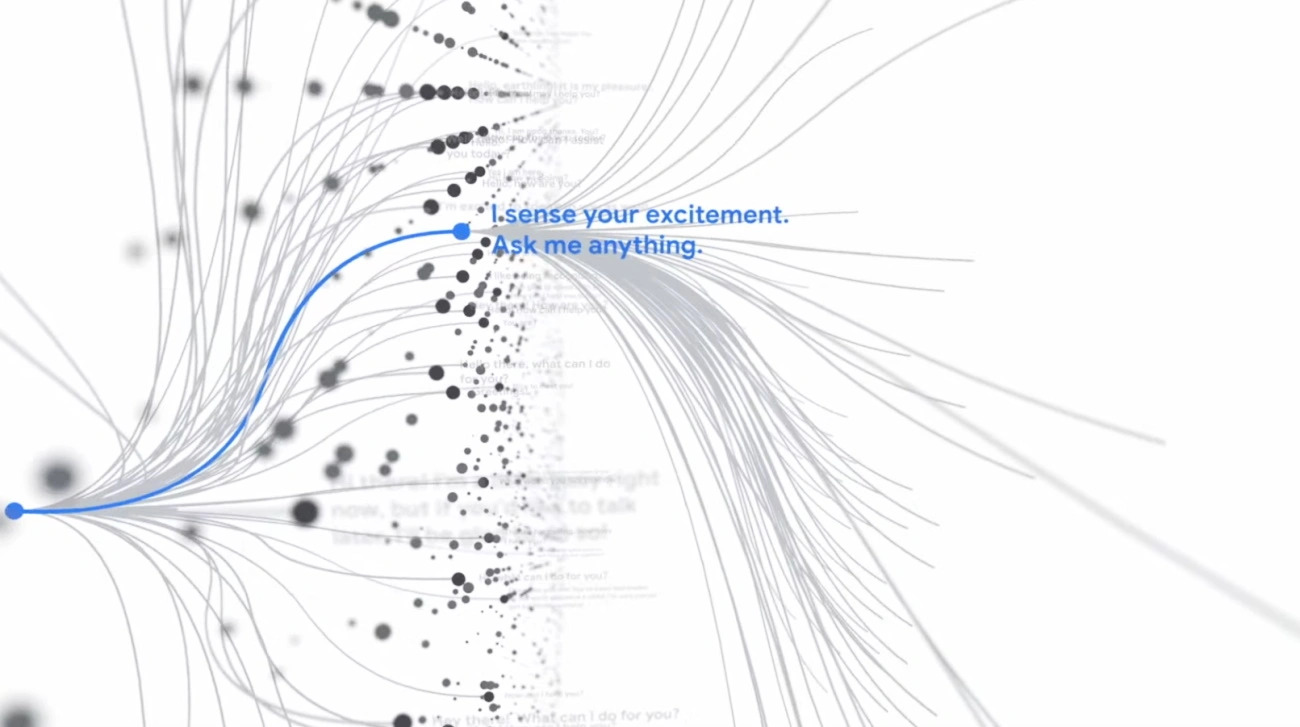

Artificial Intelligence (AI) has many uses: it helps organize our day and improves how we take photos, among other things. While AI is usually based on a complex model, reports of an AI being sentient have caused a Google engineer to be placed on administrative leave.

This occurred after Google engineer Blake Lemoine told The Washington Post that he believes LaMDA (Language Model for Dialogue Applications), an AI chatbot that relies on Google’s language models, has become sentient. Lemoine also discussed his work, and what he described as Google’s unethical activities around AI, with a representative of the House Judiciary Committee.

After Lemoine stated that LaMDA has become sentient, Google placed him on paid administrative leave for breaching the company’s confidentiality agreement. The claim is an issue for Google because the company said in a press release a year ago that LaMDA adheres to Google’s AI principles, and it considers Lemoine’s statement provocative.

Google flatly denied Lemoine’s argument, stating that it reviewed Lemoine’s concerns and “informed him that the evidence does not support his claims.” Lemoine does not have proof that LaMDA has reached sentience, and he admitted that his claims are based on his experience as a priest, not as a scientist.

While we don’t get to see LaMDA thinking on its own or having its own consciousness (despite a transcript suggesting that it might be sentient), we may still be far off from seeing the likes of Ultron or HAL 9000 from 2001: A Space Odyssey, which we all know are purely science fiction, become a reality. That could be dangerous should it happen, as we cannot know how people might use or abuse sentient AI models.