While Filipinos are still furious about GMA News and its very robotic AI sportscasters at NCAA Season 99, OpenAI has rolled out upgrades that let ChatGPT respond to voice and photo prompts. As OpenAI put it in its announcement, ChatGPT can now see, hear, and speak. These updates make using ChatGPT feel more like talking to a real person.

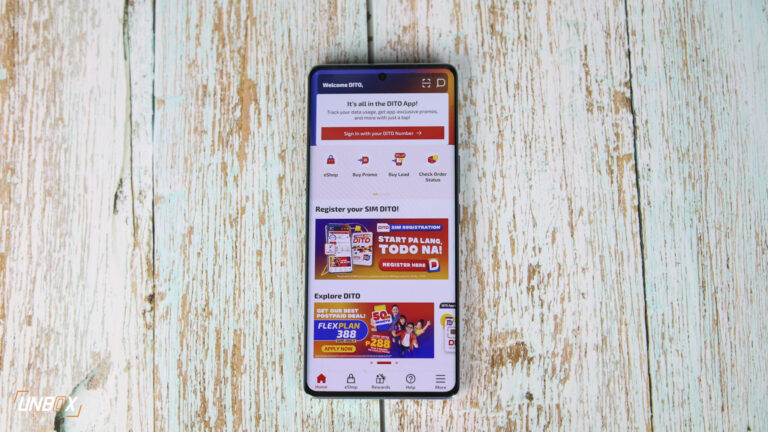

The photo prompt feature may feel familiar, since it works on the same principle as Google Lens. You take a photo (or upload one) and send it to ChatGPT, and the AI assistant works out what you're asking and responds accordingly. On paper, it goes a step beyond Google Lens: ChatGPT includes a drawing tool that lets you mark the part of the photo you care about, which helps it give a more relevant answer.

The voice prompt, on the other hand, uses OpenAI's Whisper speech-recognition model to transcribe what you say into text. ChatGPT's spoken replies come from a new text-to-speech model that can generate "human-like audio from just text and a few seconds of sample speech." This capability has some promising use cases. One is a partnership with Spotify, which plans to use it to translate podcasts into other languages while keeping as much of the podcaster's original voice as possible.

As with anything involving AI, the voice and photo prompts bring a new round of potential problems for ChatGPT. Just as GMA News' AI sportscasters raised concerns, both upgrades are open to abuse by users; a voice model that can mimic someone from a few seconds of audio, for instance, could be used to impersonate people. GMA has insisted that it "also value[s] training and upskilling our employees so they could be empowered in this age of AI," and OpenAI is likewise taking a cautious approach with ChatGPT's new voice and image abilities.

OpenAI explained that it has "taken technical measures to significantly limit ChatGPT's ability to analyze and make direct statements about people" to encourage proper and ethical use of these new tools. ChatGPT still has its limitations, and OpenAI wants to make sure people use these features responsibly.